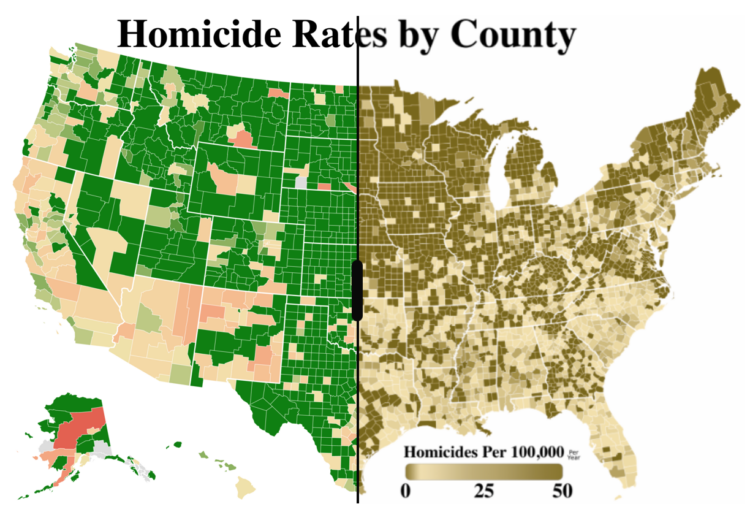

If you don’t use a colorblind-safe color palette in your maps and charts, a significant percentage of people will get nothing out of your work. For The Verge, Andy Baio, who is colorblind, discusses the experience across the web:

> Because red and green are complementary colors opposite one another on the color wheel, they’ve become the default colors for every designer who wants to represent opposites: true and false, high and low, stop and go.
>
> Inconveniently, these are also the two colors most likely to be mixed up by people with color vision deficiencies.
>
> I wish every designer in the world understood this and would switch to, say, red and blue for opposing colors. But I know that won’t happen: the cultural meaning is too ingrained.

The Verge used a slider mechanism to show what people with normal vision see and then what Baio sees. I’m usually not into sliders, which often show a before-and-after view that is meant to highlight contrast. In this case, the views are so different that the contrast works.
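If you want a starting point for a colorblind-safe palette, here is a minimal sketch in Python. It is my own assumption, not something from Baio's piece: it uses the Okabe-Ito palette, a widely used set of eight colors chosen to stay distinguishable under common color vision deficiencies. The helper names `opposing_colors` and `assign_colors` are hypothetical and only for illustration.

```python
# Okabe-Ito palette (an assumption; the article does not name a palette).
# Black is listed last so categories get the more colorful options first.
OKABE_ITO = {
    "orange": "#E69F00",
    "sky_blue": "#56B4E9",
    "bluish_green": "#009E73",
    "yellow": "#F0E442",
    "blue": "#0072B2",
    "vermillion": "#D55E00",
    "reddish_purple": "#CC79A7",
    "black": "#000000",
}


def opposing_colors():
    """Return a (low, high) pair that avoids the red-green contrast."""
    return OKABE_ITO["blue"], OKABE_ITO["vermillion"]


def assign_colors(categories):
    """Map each category to a distinct palette color, in palette order."""
    colors = list(OKABE_ITO.values())
    if len(categories) > len(colors):
        raise ValueError("more categories than palette colors")
    return dict(zip(categories, colors))


if __name__ == "__main__":
    low, high = opposing_colors()
    print("low:", low, "high:", high)
    print(assign_colors(["stop", "go"]))
```

The blue and vermillion pair is in the spirit of Baio's suggestion to use something like red and blue for opposites. The hex codes can be passed to whatever charting tool you use. It also helps to pair color with a second cue, such as labels or shapes, so the chart still reads if the colors don't.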
