A few months ago, the press office of the University of California at San Diego issued a press release with a provocative title ...

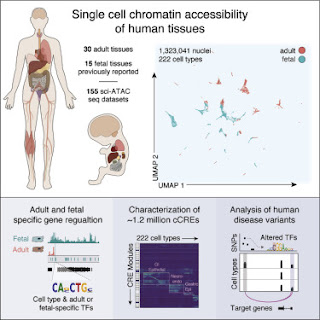

Illuminating Dark Matter in Human DNA - Unprecedented Atlas of the "Book of Life"

The press release was posted on several prominent science websites and Facebook groups. According to the press release, much of the human genome remains mysterious (dark matter) even 20 years after it was sequenced. According to the senior author of the paper, Bing Ren, we still don't understand how genes are expressed and how they might go awry in genetic diseases. He says,

A major reason is that the majority of the human DNA sequence, more than 98 percent, is non-protein-coding, and we do not yet have a genetic code book to unlock the information embedded in these sequences.

We've heard that story before and it's getting very boring. We know that 90% of our genome is junk, about 1% encodes proteins, and another 9% contains lots of functional DNA sequences, including regulatory elements. We've known about regulatory elements for more than 50 years so there's nothing mysterious about that component of noncoding DNA.

Ren and his colleagues are trying to identify regulatory elements by looking at transcription factor binding sites and their associated chromatin alterations. If this sounds familiar, it's because scientists have been mapping these sites for at least 25 years.

Efforts to fill in the blanks are broadly captured in an ongoing international effort called the Encyclopedia of DNA Elements (ENCODE), and include the work of Ren and colleagues. In particular, they have investigated the role and function of chromatin, a complex of DNA and proteins that form chromosomes within the nuclei of eukaryotic cells.

Back in 2007 and 2012, ENCODE started mapping all the spurious transcription factor binding sites in the human genome. They also mapped all the spurious transcripts that are due to nonfunctional transcription and all the open chromatin domains associated with those sites. There are millions of these sites and most of them have nothing to do with normal gene expression and biological function.

The paper being promoted by the press release (Zhang et al., 2021) continues this work by mapping more spurious transcription factor binding sites. A tiny percentage of these might be genuine cis-regulatory elements (cCREs).

They applied assays to more than 600,000 human cells sampled from 30 adult human tissue types from multiple donors, then integrated that information with similar data from 15 fetal tissue types to reveal the status of chromatin at approximately 1.2 million candidate cis-regulatory elements in 222 distinct cell types.

The paper reports a total of 1,154,661 "distinct cCREs" spanning 14.8% of the genome. In typical fashion, there's no mention of spurious binding sites and very little attempt to identify real regulatory sites. As usual, all the sites are called cis-regulatory elements even though the authors must surely know that most of them are NOT regulatory elements. Most of that 14.8% of the genome is junk DNA but the authors forget to mention that possibility. I'm sure it just slipped their mind because they must know about spurious sites after all the controversy over earlier ENCODE results dating back to 2007.

Much of the emphasis in the paper is on the possible association of these sites with various diseases. Here's what they say in the introduction.

Genome-wide association studies (GWAS) have identified hundreds of thousands of genetic variants associated with a broad spectrum of human traits and diseases. The large majority of these variants are noncoding.

That's an interesting piece of information. If true, it means that there are at least 200,000 "genetic variants" or about eight per gene. Most of them will be in junk DNA and will have nothing to do with any nearby genes. They just happen to be linked to those genes. The authors identified 527 transcription factor binding sites that might possibly be associated with genetic variants that could be influencing nearby genes. Two of them look promising; one is associated with ulcerative colitis and one is associated with osteoarthritis. That's two out of 1.2 million.

I think this is what they mean when they say they're illuminating the dark matter of the genome.

Nobody questions the usefullness of GWAS in mapping genes to phenotypes, including diseases. Nobody doubts that some of these genetic association studies will actually reveal the genetic cause of the phenotypic change, as opposed to fortuitous associations with linked polymorphisms. Nobody is surprised that most of these genetic markers lie outside of protein-coding regions. What surprises me is that the benefits of these mapping experiments aren't presented in a context that recognizes the limitations and the difficulties in interpreting the data. In this case, why not discuss the fact that the vast majority of these so-called cCREs are likely to be spurious transcription factor binding sites that have nothing to do with gene regulation or disease? This means that the problem of identifying a few functional promoters amid a sea of irrelevant features is very difficult. Why not say that?

I struggle to come up with an explanation for this behavior. Is it because the authors know about spurious binding sites but don't want to complicate their paper by mentioning them? Or is it because the authors don't really understand junk DNA and spurious binding sites? And how do you explain the reviewers who approve these papers for publication?

Zhang, K., Hocker, J.D., Miller, M., Wang, A., Preisel, S. and Ren, B. (2021) A single-cell atlas of chromatin accessibility in the human genome. Cell 184: 5985-6001 e5919 doi: 10.1016/j.cell.2021.10.024