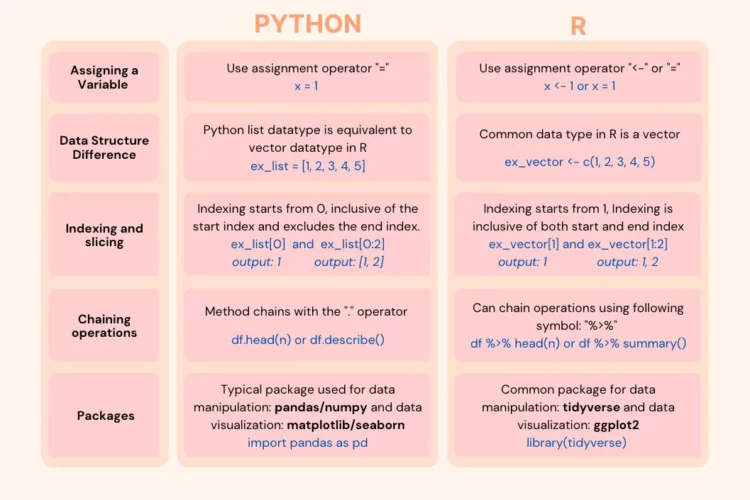

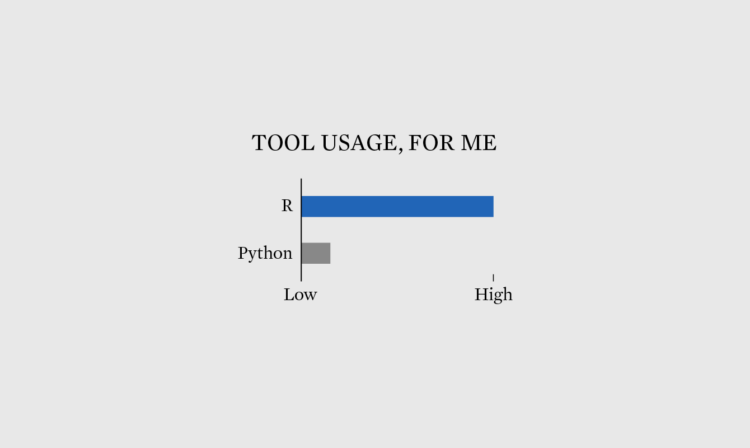

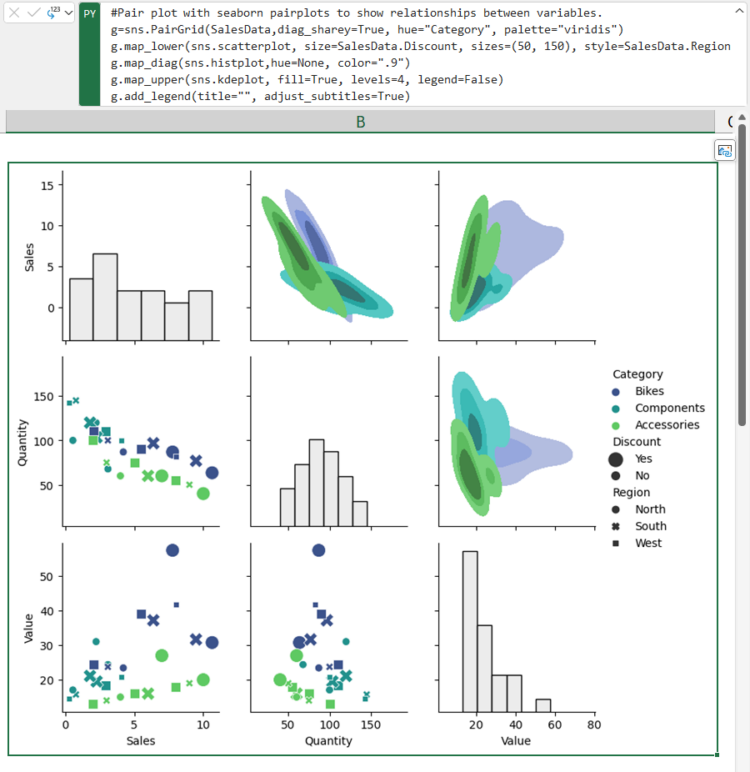

Welcome to The Process, where we look closer at how the charts get made. This is issue #256. I’m Nathan Yau. The debate over whether to use R or Python for data visualization, and data analysis in general, is a useless one. Because R is clearly better. (I’m kidding.)


An Introduction to Statistical Learning, with Applications in R by Gareth James, Daniela Witten, Trevor Hastie, and Rob Tibshirani was
