At the end of 2013, we presented DEB submission and award portfolio data examining the initial results of the preliminary proposal process, which led to DEB’s FY2013 awards. In this post, we follow up on the second round of awards funded under the preliminary proposal system, in FY2014.

For a refresher, you can visit last year’s post.

The main takeaway from the 2014 data is that the following aspects of our portfolio do not exhibit changes associated with the new system.

FY2014 Summary Numbers

In FY2014, DEB awarded 136 core program projects (comprising 197 separate awards). The numbers and charts below all reflect counts of projects.

These projects were selected from 520 full proposals reviewed in DEB during October and November of 2013. Those full proposals arrived by invitation under the Core Programs and LTREB solicitations, via the CAREER, OPUS, and RCN solicitations, or as co-review with another NSF program. The invited proposals had been selected from the 1629 preliminary proposals initially submitted in January of 2013.

Below, we present and discuss charts tracking the trends for several dimensions of our project demographics that were raised as concerns going into the preliminary proposal system. The numbers are presented as proportions for comparison across the review stages. However, the progressive winnowing of total numbers from preliminary proposals to awards means each step becomes more sensitive to small absolute changes.

In all cases of award trends shown below, the absolute change from FY2013 to FY2014 was no more than 10 projects.
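To put the sensitivity point above in numbers, here is a small sketch using the FY2014 totals given earlier in this post (1629 preliminary proposals, 520 full proposals, 136 awarded projects). It asks how many percentage points a category’s share moves when 10 projects change category while the total is held fixed. Holding the total fixed is a simplifying assumption; real year-to-year comparisons also involve different totals. The function name `shift_in_points` is hypothetical.

```python
# FY2014 totals reported in this post (counts of projects).
STAGE_TOTALS = {
    "preliminary proposals": 1629,
    "full proposals": 520,
    "awards": 136,
}


def shift_in_points(n_moved: int, total: int) -> float:
    """Percentage-point change in a category's share when n_moved of a fixed total change category."""
    return 100 * n_moved / total


for stage, total in STAGE_TOTALS.items():
    print(f"10 projects among {total} {stage}: {shift_in_points(10, total):.1f} pts")
```

The same 10 projects amount to about 0.6 points of the preliminary proposal pool, 1.9 points of the full proposals, and 7.4 points of the awards, which is why the award-stage proportions can look jumpy.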

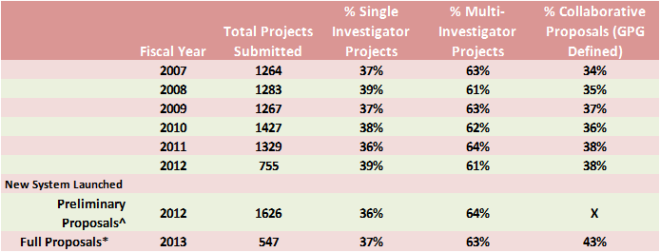

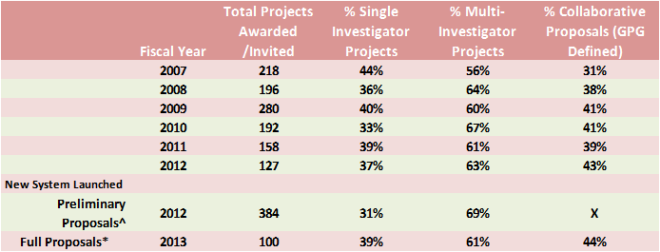

Individual and Collaborative Projects

As seen in the figure below, there was little year-to-year change in the performance of single investigator projects; the small change is consistent with prior inter-annual variation. Most of the apparent jump in the proportion of single investigator projects between the preliminary proposal and full proposal stages is an artifact of the counting method. As we discussed last year, the primarily single-investigator proposals in the CAREER and OPUS categories are not subject to the preliminary proposal screen. As a result, they make up a relatively larger portion of the full proposals than in years prior to the system, and their absence from the preliminary proposal counts depresses the single investigator proportion at that stage relative to the historical full proposal baseline.

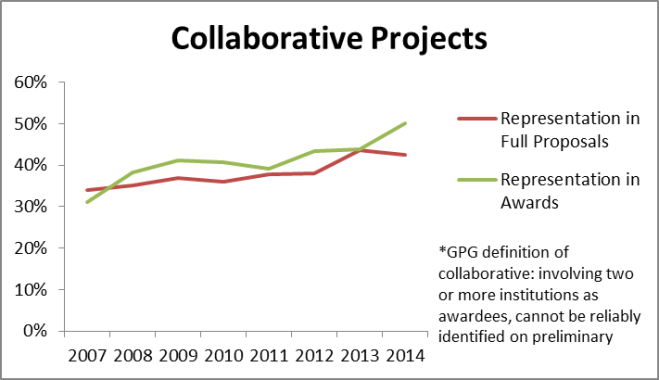

Growth in the proportion of collaborative projects in our award portfolio continues the generally upward trend of the past several years. We would expect a plateau at some point, but where that might be isn’t clear.

Readers may notice that the year-to-year increase in collaborative project awards for FY2014 is a few percentage points larger than the decrease in single investigator awards shown above. This difference reflects an increase in multi-institutional teams (which meet the NSF definition of “collaborative”) relative to intra-institutional teams (which involve intellectual collaboration, to be sure, but are not collaborative projects by that definition).
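The reasoning above relies on there being three categories rather than two. The sketch below classifies a project from the institutions of its PIs: one PI is a single investigator project, several PIs at one institution is an intra-institutional team, and PIs at more than one institution is a collaborative project. Representing a project as a list of PI institutions, and the names `ProjectType` and `classify`, are hypothetical simplifications for illustration, not DEB’s actual coding rules.

```python
from enum import Enum


class ProjectType(Enum):
    SINGLE_INVESTIGATOR = "single investigator"
    INTRA_INSTITUTIONAL = "multi-PI, one institution"
    COLLABORATIVE = "collaborative (multi-institutional)"


def classify(pi_institutions: list[str]) -> ProjectType:
    """Classify a project from its PIs' institutions (one entry per PI)."""
    if not pi_institutions:
        raise ValueError("a project needs at least one PI")
    if len(pi_institutions) == 1:
        return ProjectType.SINGLE_INVESTIGATOR
    if len(set(pi_institutions)) == 1:
        return ProjectType.INTRA_INSTITUTIONAL
    return ProjectType.COLLABORATIVE


# Hypothetical projects; institution names are placeholders.
for pis in (["Univ A"], ["Univ A", "Univ A"], ["Univ A", "Univ B"]):
    print(pis, "->", classify(pis).value)
```

Because these three categories partition the portfolio, a shift of awards from intra-institutional teams toward multi-institutional teams raises the collaborative share without any matching drop in the single investigator share.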

Gender & Predominantly Undergraduate Institution (PUI) Status

Female PIs experienced a sizeable year-to-year drop in their proportion of awards this year, although their proportion of submissions at both the preliminary and full proposal stages continues to increase. Such a drop is visually jarring, but not unprecedented. In absolute terms, this is a difference of eight projects across four clusters, each with one or two full proposal review panels: essentially noise in the signal.

In contrast, PUIs experienced a large proportional increase in awards this year. Once again, this is presumably due to noise within the programs’ decision-making (a difference of only nine awards), since submissions did not change appreciably.

These single-year changes for PUIs and female PIs appear to emerge from the full proposal review and program decision-making stage, not the preliminary proposal stage. They would seem to be a product of program officer (PO) portfolio management, and such swings are an inevitable result of the numerous dimensions of a “balanced portfolio” that need to be tended with a relatively small number of awards.

Early Career Scientists

As we discussed in the FY2013 Wrap-up, there are several imperfect metrics of early career investigator performance, with the “Beginning Investigator” check-box on the cover page being the most immediately visible but also the most inconsistently applied identifier.

By the check-box identifier, beginning investigators continue to receive awards in proportion to full proposal submissions. A gap between the preliminary and full proposal stages is expected because of the influx of proposals from the CAREER, OPUS, and RCN mechanisms, which tend to have lower rates of beginning investigator PIs in DEB. The proportion of checked boxes at the preliminary proposal stage may also be elevated because the box is commonly, but incorrectly, checked in reference to persons other than the PI; at the preliminary proposal stage, those persons could include people from non-lead collaborator institutions.

The other identifier of career stage is the years since PhD of the PI.

With “Early-career” defined as <10 years post-PhD, “Mid-career” as 10–20 years post-PhD, and “Advanced-career” as >20 years post-PhD, we can give a broader and more accurate overview of the PI population.
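The career-stage bins above amount to a simple mapping from years since PhD to a label. The sketch below is one way to write it; treating exactly 10 years as Mid-career and exactly 20 years as Mid-career follows from the stated ranges, but how DEB handles fractional years or missing degree dates is not stated in this post and is left out here. The function names `career_stage` and `stage_shares` are hypothetical.

```python
from collections import Counter


def career_stage(years_since_phd: float) -> str:
    """Map years since PhD to the career-stage bins described in the post."""
    if years_since_phd < 0:
        raise ValueError("years_since_phd must be non-negative")
    if years_since_phd < 10:
        return "Early-career"
    if years_since_phd <= 20:  # 10-20 years inclusive
        return "Mid-career"
    return "Advanced-career"   # more than 20 years


def stage_shares(years: list[float]) -> dict[str, float]:
    """Fraction of PIs falling in each career stage."""
    counts = Counter(career_stage(y) for y in years)
    return {stage: n / len(years) for stage, n in counts.items()}


# Hypothetical PI degree ages, for illustration only.
print(stage_shares([3, 12, 25, 30]))
```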

From 2013 to 2014, the proportion of submissions decreased slightly for Early-career PIs (-2 percentage points), increased for Mid-career PIs (+6 pts) and decreased for Advanced-career PIs (-4 pts). Even with these changes, the Early-career cohort still represents the largest portion of submissions at 39%.

With respect to awardees, the PI profile shifted prominently toward Mid-career PIs from 2013 to 2014. That cohort increased by 10 pts to 35% of awards, which matches its share of submissions. Advanced-career PIs dropped 3 pts and make up the smallest portion of the award portfolio (32%), but their share of awards is still above their share of submissions. Early-career PIs represented a smaller portion of the 2014 awards (-7 pts from 2013) and were somewhat underrepresented compared to submissions, constituting the remaining 33% of awards.

The changes in the awardee degree age profile from 2013 to 2014 resulted in a more even distribution among the three categories of Early-, Mid-, and Advanced-career, but in greater departures from each category’s representation in submissions. However, it remains to be determined what distribution represents the “optimal” structure of the awardee population, or even on what criteria to judge optimality.
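The comparison in the paragraphs above can be made concrete with some arithmetic on the reported percentages. The sketch below takes the 2014 shares stated in the post, backs out the 2013 shares from the reported changes, and compares award shares with submission shares. The Advanced-career submission share (26%) is not stated directly; it is inferred here as the remainder after the Early- (39%) and Mid-career (35%) shares. All inputs are rounded, so the derived values are approximate, and the helper names are hypothetical.

```python
# Career-stage shares in percentage points. 2014 values come from the post;
# the Advanced-career submission share is inferred as the remainder.
SUBMISSIONS_2014 = {"Early": 39, "Mid": 35, "Advanced": 100 - 39 - 35}
AWARDS_2014 = {"Early": 33, "Mid": 35, "Advanced": 32}

# Reported 2013 -> 2014 changes, in percentage points.
SUBMISSION_CHANGE = {"Early": -2, "Mid": 6, "Advanced": -4}
AWARD_CHANGE = {"Early": -7, "Mid": 10, "Advanced": -3}


def back_out(current: dict[str, int], change: dict[str, int]) -> dict[str, int]:
    """Previous-year shares = current shares minus the year-over-year change."""
    return {stage: current[stage] - change[stage] for stage in current}


def gaps(awards: dict[str, int], submissions: dict[str, int]) -> dict[str, int]:
    """Award share minus submission share (positive = overrepresented among awards)."""
    return {stage: awards[stage] - submissions[stage] for stage in awards}


SUBMISSIONS_2013 = back_out(SUBMISSIONS_2014, SUBMISSION_CHANGE)
AWARDS_2013 = back_out(AWARDS_2014, AWARD_CHANGE)

for year, awards, submissions in [
    (2013, AWARDS_2013, SUBMISSIONS_2013),
    (2014, AWARDS_2014, SUBMISSIONS_2014),
]:
    gap = gaps(awards, submissions)
    spread = max(awards.values()) - min(awards.values())  # evenness of award shares
    total_gap = sum(abs(g) for g in gap.values())          # distance from submissions
    print(f"{year}: awards={awards} gaps={gap} spread={spread} total_gap={total_gap}")
```

On these rounded figures, the spread between the largest and smallest award share narrows from 15 pts in 2013 to 3 pts in 2014, while the summed absolute gap between award and submission shares grows from 10 to 12 pts, which is the pattern described above.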

Success Rate

| Fiscal Year | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2012/2013 | 2013/2014 | 2014/2015 |
|---|---|---|---|---|---|---|---|---|---|
| Preliminary Proposal\* | | | | | | | 22.0% | 22.4% | 23.0% |
| Full Proposal\*\* | 17.2% | 15.3% | 22.1% | 13.5% | 11.9% | 16.8% | 24.1% | 26.2% | N/A, awaiting budget |
| Overall\*\*\* | 17.2% | 15.3% | 22.1% | 13.5% | 11.9% | 16.8% | 7.3% | 7.6% | N/A, awaiting budget |

\* = Ninvited_full / Nsubmitted_preliminary

\*\* = Nawarded / (Ninvited_full + Ndirect_submission^)

\*\*\* = Nawarded / (Nsubmitted_preliminary + Ndirect_submission^)

^ Ndirect_submission = all proposals through 2012; after 2012, only CAREER, OPUS, RCN, co-review, and LTREB renewals taken to panel.
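As a consistency check on the success rate formulas in the table notes, the sketch below recomputes the 2013/2014 rates from the counts given earlier in this post: 1629 preliminary proposals, 520 full proposals reviewed, and 136 awarded projects. The post does not report how the 520 full proposals split between invited and direct submissions, so the sketch approximates the invited count from the rounded 22.4% preliminary rate; that split is an inference, not an official figure. The `ReviewCycle` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReviewCycle:
    """Counts for one preliminary-proposal cycle (field names are illustrative)."""
    n_submitted_preliminary: int  # preliminary proposals submitted
    n_invited_full: int           # full proposals invited after preliminary review
    n_direct_submission: int      # CAREER, OPUS, RCN, co-review, LTREB renewals
    n_awarded: int                # projects awarded

    def preliminary_rate(self) -> float:
        # * = Ninvited_full / Nsubmitted_preliminary
        return self.n_invited_full / self.n_submitted_preliminary

    def full_rate(self) -> float:
        # ** = Nawarded / (Ninvited_full + Ndirect_submission)
        return self.n_awarded / (self.n_invited_full + self.n_direct_submission)

    def overall_rate(self) -> float:
        # *** = Nawarded / (Nsubmitted_preliminary + Ndirect_submission)
        return self.n_awarded / (self.n_submitted_preliminary + self.n_direct_submission)


# FY2014 figures reported in this post.
N_PRELIM = 1629
N_FULL_REVIEWED = 520
N_AWARDED = 136

# The invited/direct split is NOT reported; approximate it from the rounded
# 22.4% preliminary rate in the table (an inference, not an official count).
n_invited = round(0.224 * N_PRELIM)
n_direct = N_FULL_REVIEWED - n_invited

fy2014 = ReviewCycle(N_PRELIM, n_invited, n_direct, N_AWARDED)
for label, rate in [
    ("preliminary", fy2014.preliminary_rate()),
    ("full", fy2014.full_rate()),
    ("overall", fy2014.overall_rate()),
]:
    print(f"{label}: {rate:.1%}")
```

With the inferred split of roughly 365 invited and 155 direct proposals, the three printed rates come out at 22.4%, 26.2%, and 7.6%, matching the 2013/2014 column. The full proposal rate depends only on the 520 total, so it does not rely on the inferred split at all.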

As we noted last year, we don’t put much value on “success rate” as a metric of program performance because it is driven by factors exogenous to DEB: the budget passed by Congress and the number and size of submissions sent in by the research community. However, we recognize its importance to you as a signal of program health and accessibility. In that regard, we are breathing a slight sigh of relief at the first departure, outside of extraordinary circumstances, from the downward slide that has dominated the past decade.