
Category Archives: Genome

90% of your genome is junk

If you are interested in learning more about junk DNA, here are some links to relevant information.

- Prologue
- Chapter 1: Introducing Genomes
- Chapter 2: The Evolution of Sloppy Genomes
- Chapter 3: Repetitive DNA and Mobile Genetic Elements

A small crustacean with a very big genome

The Antarctic krill genome is the largest animal genome sequenced to date.

Antarctic krill (Euphausia superba) is a species of small crustacean (about 6 cm long) that lives in large swarms in the seas around Antarctica. It is one of the most abundant animals on the planet in terms of biomass and numbers of individuals.

It was known to have a large genome with abundant repetitive DNA sequences, which made assembly of a complete genome very difficult. Recent technological advances have made it possible to sequence very long fragments of DNA that span many of the repetitive regions and allow assembly of a complete genome (Shao et al. 2023).

The project involved 28 scientists from China (mostly), Australia, Denmark, and Italy. To give you an idea of the effort involved, they listed the sequencing data that was collected: 3.06 terabases (Tb) PacBio long read sequences, 734.99 Gb PacBio circular consensus sequences, 4.01 Tb short reads, and 11.38 Tb Hi-C reads. The assembled genome is 48.1 Gb, which is considerably larger than that of the African lungfish (40 Gb), which up until now was the largest fully sequenced animal genome.
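To put those data volumes in perspective, here is a rough sketch that converts each yield into average fold coverage of the assembled genome. It assumes that coverage is simply total bases divided by the 48.1 Gb assembly size, which ignores read quality, duplicates, and contamination; the function and variable names are mine, not the authors'.

```python
# Rough fold-coverage estimate: total sequenced bases / genome size.
# Assumption: the 48.1 Gb assembly size is used as the denominator.

GENOME_SIZE_GB = 48.1  # assembled krill genome size quoted in the post

# Yields in gigabases (1 Tb = 1,000 Gb), as listed in the post
yields_gb = {
    "PacBio long reads": 3060.0,
    "PacBio circular consensus": 734.99,
    "short reads": 4010.0,
    "Hi-C reads": 11380.0,
}


def fold_coverage(yield_gb, genome_gb=GENOME_SIZE_GB):
    """Average number of times each base of the genome was sequenced."""
    return yield_gb / genome_gb


for name, gb in yields_gb.items():
    print(f"{name}: about {fold_coverage(gb):.0f}X")
```

Even the smallest data set here covers the genome many times over, which gives some idea of why a project like this needs serious computing resources.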

The current draft has 28,834 protein-coding genes and an unknown number of noncoding genes. About 92% of the genome is repetitive DNA that's mostly transposon-related sequences. However, there is an unusual amount of highly repetitive DNA organized as long tandem repeats and this made the assembly of the complete genome quite challenging.

The protein-coding genes in the Antarctic krill are longer than in other species due to the insertion of repetitive DNA into introns but the increase in intron size is less than expected from studies of other large genomes such as lungfish and Mexican axolotl. It looks like more of the genome expansion has occurred in the intergenic DNA compared to these other species.

This study supports the idea that genome expansion is mostly due to the insertion and propagation of repetitive DNA sequences. Some of us think that the repetitive DNA is mostly junk DNA but in this case it seems unusual that there would be so much junk in the genome of a species with such a huge population size (about 350 trillion individuals). The authors were aware of this problem but they were able to calculate an effective population size because they had sequence data from different individuals all around Antarctica. The effective population size (Ne) turned out to be one billion times smaller than the census population size indicating that the population of krill had been much smaller in the recent past. Their data suggests strongly that this smaller population existed only 10 million years ago.
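Taking the two figures in the previous paragraph at face value, the implied effective population size is easy to work out. This is only an order-of-magnitude sketch: "one billion times smaller" is treated as an exact ratio, and the names are hypothetical.

```python
# Order-of-magnitude sketch of the effective population size (Ne).
# Assumption: the census size and the census/Ne ratio are exact.

CENSUS_SIZE = 350e12       # about 350 trillion individuals (from the post)
CENSUS_TO_NE_RATIO = 1e9   # "one billion times smaller" (from the post)


def effective_population_size(census, ratio):
    """Ne implied by a census size and a census-to-Ne ratio."""
    return census / ratio


ne = effective_population_size(CENSUS_SIZE, CENSUS_TO_NE_RATIO)
print(f"Implied Ne is on the order of {ne:,.0f} individuals")
```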

The authors don't mention junk DNA. They seem to favor the idea that large genomes are associated with crustaceans that live in polar regions and that large genomes may confer a selective advantage.

Shao, C., Sun, S., Liu, K., Wang, J., Li, S., Liu, Q., Deagle, B.E., Seim, I., Biscontin, A., Wang, Q. et al. (2023) The enormous repetitive Antarctic krill genome reveals environmental adaptations and population insights. Cell 186:1-16. [doi: 10.1016/j.cell.2023.02.005]

New & Improved NCBI Datasets Genome and Assembly Pages


How Intelligent Design Creationists try to deal with the similarity between human and chimp genomes

The initial measurement of the difference between the human and chimp genomes was based on aligning 2.4 billion base pairs in the two genomes. This gave a difference of 1.23% by counting base pair substitutions and small deletions and insertions (indels). However, if you look at larger indels, including genes, you can come up with bigger values because you can count the total number of base pairs in each indel; for example, a deletion of 1,000 bp will be equivalent to 1,000 SNPs.
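The effect of the counting method can be illustrated with a toy calculation. The numbers below are hypothetical and are not taken from any human-chimp alignment; the sketch also keeps the denominator fixed for simplicity, whereas a real analysis would have to decide whether indel bases count toward the aligned length.

```python
# Toy illustration of two ways to score the difference between aligned genomes.
# All input values are hypothetical.


def percent_difference(substitutions, indel_lengths, aligned_bp, count_indel_bases):
    """Percent difference between two aligned sequences.

    count_indel_bases=False: each indel counts as a single event.
    count_indel_bases=True:  each indel counts once per base pair it spans.
    """
    if count_indel_bases:
        indel_score = sum(indel_lengths)
    else:
        indel_score = len(indel_lengths)
    return 100.0 * (substitutions + indel_score) / aligned_bp


aligned_bp = 1_000_000                    # hypothetical alignment length
substitutions = 10_000                    # hypothetical substitution count
indel_lengths = [1, 3, 12, 1_000, 5_000]  # hypothetical indel sizes in bp

by_event = percent_difference(substitutions, indel_lengths, aligned_bp, False)
by_base = percent_difference(substitutions, indel_lengths, aligned_bp, True)
print(f"indels as events: {by_event:.2f}%")
print(f"indels per base:  {by_base:.2f}%")
```

Two large indels are enough to move the per-base figure well above the per-event figure, even though the number of mutational events is identical in both calculations.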


David Allis (1951 – 2023) and the “histone code”

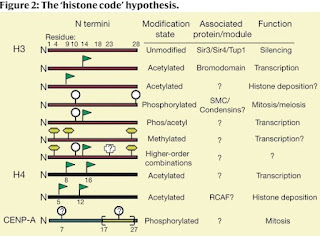

C. David Allis died on January 8, 2023. You can read about his history of awards and accomplishments in the Nature obituary with the provocative subtitle Biologist who revolutionized the chromatin and gene-expression field. This refers to his work on histone acetyltransferases (HATs) and his ideas about the histone code.

The key paper on the histone code is,

Strahl, B. D., and Allis, C. D. (2000) The language of covalent histone modifications. Nature, 403:41-45. [doi: 10.1038/47412]

Histone proteins and the nucleosomes they form with DNA are the fundamental building blocks of eukaryotic chromatin. A diverse array of post-translational modifications that often occur on tail domains of these proteins has been well documented. Although the function of these highly conserved modifications has remained elusive, converging biochemical and genetic evidence suggests functions in several chromatin-based processes. We propose that distinct histone modifications, on one or more tails, act sequentially or in combination to form a ‘histone code’ that is, read by other proteins to bring about distinct downstream events.

They are proposing that the various modifications of histone proteins can be read as a sort of code that's recognized by other factors that bind to nucleosomes and regulate gene expression.

This is an important contribution to our understanding of the relationship between chromatin structure and gene expression. Nobody doubts that transcription is associated with an open form of chromatin that correlates with demethylation of DNA and covalent modifications of histones, and nobody doubts that there are proteins that recognize modified histones. However, the key question is what comes first: does the binding of transcription factors lead to changes in the DNA and histones, or do the changes to DNA and histones open the chromatin so that transcription factors can bind? These two models are referred to as the recruitment model and the histone code model, respectively.

Strahl and Allis did not address this controversy in their original paper; instead, they concentrated on what happens after histones become modified. That's what they mean by "downstream events." Unfortunately, the histone code model has been appropriated by the epigenetics cult and they do not distinguish between cause and effect. For example,

The “histone code” is a hypothesis which states that DNA transcription is largely regulated by post-translational modifications to these histone proteins. Through these mechanisms, a person’s phenotype can change without changing their underlying genetic makeup, controlling gene expression. (Shahid et al., 2022)

The language used by fans of epigenetics strongly implies that it's the modification of DNA and histones that is the primary event in regulating gene expression and not the sequence of DNA. The recruitment model states that regulation is primarily due to the binding of transcription factors to specific DNA sequences that control regulation and then lead to the epiphenomenon of DNA and histone modification.

The unauthorized expropriation of the histone code hypothesis should not be allowed to diminish the contribution of David Allis.


How big is the human genome (2023)?

There are several different ways to describe the human genome but the most common one focuses on the DNA content of the nucleus in eukaryotes; it does not include mitochondrial and chloroplast DNA. The standard reference genome sequence consists of one copy of each of the 22 autosomes plus one copy of the X chromosome and one copy of the Y chromosome. That's the definition of genome that I will use here.

The earliest direct estimates of the size of human genome relied on Feulgen staining. The stain is quantitative so a properly conducted procedure gives you the weight of DNA in the nucleus. According to these measurements, the standard diploid content of the human nucleus is 7.00 pg and the haploid content is 3.50 pg [See Ryan Gregory's Animal Genome Size Database].

Since the structure of DNA is known, we can estimate the average mass of a base pair. It is 650 daltons, or 1086 × 10⁻²⁴ g/bp. The size of the human genome in base pairs can be calculated by dividing the total mass of the haploid genome by the average mass of a base pair.

3.5 pg ÷ (1086 × 10⁻¹² pg/bp) = 3.2 × 10⁹ bp
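The same calculation can be sketched in a few lines of code. The version below runs it twice: once with the per-base-pair mass quoted above, and once by converting 650 daltons to picograms using Avogadro's number. The function names are my own, and the two routes differ by less than 1% because of rounding in the base-pair mass.

```python
# Convert a haploid DNA mass in picograms to a genome size in base pairs.

AVOGADRO = 6.02214076e23  # per mole; one dalton is 1/AVOGADRO grams
PG_PER_GRAM = 1e12


def bp_mass_in_pg(bp_mass_daltons):
    """Mass of one base pair in picograms, given its mass in daltons."""
    return bp_mass_daltons / AVOGADRO * PG_PER_GRAM


def genome_size_bp(haploid_mass_pg, pg_per_bp):
    """Number of base pairs in a genome of the given mass."""
    return haploid_mass_pg / pg_per_bp


# Route 1: the per-bp mass quoted in the post (1086 x 10^-12 pg/bp)
size_from_post = genome_size_bp(3.5, 1086e-12)

# Route 2: start from 650 daltons per base pair
size_from_daltons = genome_size_bp(3.5, bp_mass_in_pg(650))

print(f"from quoted mass: {size_from_post / 1e9:.2f} Gb")
print(f"from 650 daltons: {size_from_daltons / 1e9:.2f} Gb")
```

Both routes round to 3.2 Gb, so the small uncertainty in the average mass of a base pair does not change the conclusion.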

The textbooks settled on this value of 3.2 Gb by the late 1960s since it was confirmed by reassociation kinetics. According to C0t analysis results from that time, roughly 10% of the genome consists of highly repetitive DNA, 25-30% is moderately repetitive and the rest is unique sequence DNA (Britten and Kohne, 1968).

A study by Morton (1991) looked at all of the estimates of genome size that had been published to date and concluded that the average size of the haploid genome in females is 3,227 Mb. This includes a complete set of autosomes and one X chromosome. The sum of autosomes plus a Y chromosome comes to 3,122 Mb. The average is about 3,200 Mb, which is similar to most estimates.

These estimates mean that the standard reference genome should be more than 3,227 Mb since it has to include all of the autosomes plus an X and a Y chromosome. The Y chromosome is about 60 Mb giving a total estimate of 3,287 Mb or 3.29 Gb.

The standard reference genome

The common assumption about the size of the human genome in the past two decades has dropped to about 3,000 Mb because the draft sequence of the human genome came in at 2,800 Mb and the so-called "finished" sequence was still considerably less than 3,200 Mb. Most people didn't realize that there were significant gaps in the draft sequence and in the "finished" sequence so the actual size is larger than the amount of sequence. The latest estimate of the size of the human genome from the Genome Reference Consortium is 3,099,441,038 bp (3,099 Mb) (Build 38, patch 14 = GRCh38.p14, February 2022). This includes an actual sequence of 2,948,318,359 bp and an estimate of the size of the remaining gaps. The total size estimates have been steadily dropping from >3.2 Gb to just under 3.1 Gb.
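The two GRCh38.p14 figures quoted above are enough to work out how much of the reference is estimated gap rather than actual sequence. Here is a minimal sketch using only those two numbers; the variable names are mine.

```python
# How much of GRCh38.p14 is actual sequence and how much is estimated gap?

TOTAL_BP = 3_099_441_038      # total size, including estimated gaps
SEQUENCED_BP = 2_948_318_359  # actual sequence

gap_bp = TOTAL_BP - SEQUENCED_BP
percent_sequenced = 100 * SEQUENCED_BP / TOTAL_BP

print(f"estimated gaps: {gap_bp:,} bp")
print(f"sequenced: {percent_sequenced:.1f}% of the total")
```

The gaps add up to about 151 Mb, which means that roughly 95% of the estimated total is real sequence.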

The telomere-to-telomere assembly

The first complete sequence of a human genome was published in April, 2022 [The complete human genome sequence (2022)]. This telomere-to-telomere (T2T) assembly of every autosome and one X chromosome came in at 3,055 Mb (3.06 Gb). If you add in the Y chromosome, it comes to 3.12 Gb, which is very similar to the estimate for GRCh38.p14 (3.10 Gb). Based on all the available data, I think it's safe to say that the size of the human genome is about 3.1 Gb and not the 3.2 Gb that we've been using up until now.

Variations in genome size

Everything comes with a caveat and human genome size is no exception. The actual size of your human genome may be different than mine and different from everyone else's, including your close relatives. This is because of the presence or absence of segmental duplications that can change the size of a human genome by as much as 200 Mb. It's possible to have a genome that's smaller than 3.0 Gb or one that's larger than 3.3 Gb without affecting fitness.

Nobody has figured out a good way to incorporate this genetic variation data into the standard reference genome by creating a sort of pan genome such as those we see in bacteria. The problem is that more and more examples of segmental duplications (and deletions) are being discovered every year so annotating those changes is a nightmare. In fact, it's a major challenge just to reconcile the latest telomere-to-telomere sequence (T2T-CHM13) and the current standard reference genome [What do we do with two different human genome reference sequences?].

[Image Credit: Wikipedia: Creative Commons Attribution 2.0 Generic license]

Britten, R. and Kohne, D. (1968) Repeated Sequences in DNA. Science 161:529-540. [doi: 10.1126/science.161.3841.529]

Morton, N.E. (1991) Parameters of the Human Genome. Proc. Natl. Acad. Sci. (USA) 88:7474-7476 [free article on PubMed Central]

International Human Genome Sequencing Consortium (2004) Finishing the euchromatic sequence of the human genome. Nature 431:931-945 [doi:10.1038/nature03001]

The function wars are over

In order to have a productive discussion about junk DNA we needed to agree on how to define "function" and "junk." Disagreements over the definitions spawned the Function Wars that became intense over the past decade. That war is over and now it's time to move beyond nitpicking about terminology.

The idea that most of the human genome is composed of junk DNA arose gradually in the late 1960s and early 1970s. The concept was based on a lot of evidence dating back to the 1940s and it gained support with the discovery of massive amounts of repetitive DNA.

Various classes of functional DNA were known back then including: regulatory sequences, protein-coding genes, noncoding genes, centromeres, and origins of replication. Other categories have been added since then but the total amount of functional DNA was not thought to be more than 10% of the genome. This was confirmed with the publication of the human genome sequence.

From the very beginning, the distinction between functional DNA and junk DNA was based on evolutionary principles. Functional DNA was the product of natural selection and junk DNA was not constrained by selection. The genetic load argument was a key feature of Susumu Ohno's conclusion that 90% of our genome is junk (Ohno, 1972a; Ohno, 1972b).

Sequencing both copies of your diploid genome

New techniques are being developed to obtain the complete sequences of both copies (maternal and paternal) of a typical diploid individual.

The first two sequences of the human genome were published twenty years ago by the International Human Genome Project and by a company called Celera Genomics. The published sequences were a consensus using DNA from multiple individuals so the final result didn't represent the sequence of any one person. Furthermore, since each of us has inherited separate genomes from our mother and father, our DNA is actually a mixture of two different haploid genomes. Most published genome sequences are an average of these two separate genomes where the choice of nucleotide at any one position is arbitrary.

The first person to have a complete genome sequence was James Watson in 2007 but that was a composite genome sequence. Craig Venter's genome sequence was published a few months later and it was the first complete genome sequence containing separate sequences of each of his 46 chromosomes. (One chromosome from each of his parents.) In today's language, we refer to this as a diploid sequence.

The current reference sequence is based on the data published by the public consortium (International Human Genome Project); nobody cares about the Celera sequence. Over the years, more and more sequencing data has been published and this has been incorporated into the standard human reference genome in order to close most gaps and improve the accuracy. The current version is called GRCh38.p14 from February 3, 2022. It's only 95% complete because it's missing large stretches of repetitive DNA, especially in the centromere regions and at the ends of each chromosome (telomeric region).

The important point for this discussion is that GRCh38 is not representative of the genomes of most people on Earth because there has been a bias in favor of sequencing European genomes. (Some variants are annotated in the reference genome but this can't continue.) Many scientists are interested in the different kinds of variants present in the human population so they would like to create databases of genomes from diverse populations.

The first complete, telomere-to-telomere (T2T), human genome sequence was published last year [A complete human genome sequence (2022)]. It was made possible by advances in sequencing technology that generated long reads of 10,000 bp and ultra-long reads of up to 1,000,000 bp [Telomere-to-telomere sequencing of a complete human genome]. The DNA is from a CHM13 cell line that has identical copies of each chromosome so there's no ambiguity due to differences in the maternal and paternal copies. The full name of this sequence is T2T-CHM13.

The two genomes (GRCh38 and CHM13) can't be easily merged so right now there are competing reference genomes [What do we do with two different human genome reference sequences?].

The techniques used to sequence the CHM13 genome make it possible to routinely obtain diploid genome sequences from a large number of individuals because overlapping long reads can link markers on the same chromosome and distinguish between the maternal and paternal chromosomes. However, in practice, the error rate of long read sequencing made assembly of separate chromosomes quite difficult. Recent advances in the accuracy of long read sequencing have been developed by PacBio, and this high fidelity sequencing (PacBio HiFi sequencing) promises to change the game.

The Human Pangenome Reference Consortium has tackled the problem by sequencing the genome of an Ashkenazi man (HG002) and his parents (HG003-father and HG004-mother) using the latest sequencing techniques. They then asked the genome community to submit their assemblies using their best software in a kind of "assembly bakeoff." They got 23 responses.

Jarvis, E. D., Formenti, G., Rhie, A., Guarracino, A., Yang, C., Wood, J., et al. (2022) Semi-automated assembly of high-quality diploid human reference genomes. Nature, 611:519-531. [doi: 10.1038/s41586-022-05325-5]

The current human reference genome, GRCh38, represents over 20 years of effort to generate a high-quality assembly, which has benefitted society. However, it still has many gaps and errors, and does not represent a biological genome as it is a blend of multiple individuals. Recently, a high-quality telomere-to-telomere reference, CHM13, was generated with the latest long-read technologies, but it was derived from a hydatidiform mole cell line with a nearly homozygous genome. To address these limitations, the Human Pangenome Reference Consortium formed with the goal of creating high-quality, cost-effective, diploid genome assemblies for a pangenome reference that represents human genetic diversity. Here, in our first scientific report, we determined which combination of current genome sequencing and assembly approaches yield the most complete and accurate diploid genome assembly with minimal manual curation. Approaches that used highly accurate long reads and parent–child data with graph-based haplotype phasing during assembly outperformed those that did not. Developing a combination of the top-performing methods, we generated our first high-quality diploid reference assembly, containing only approximately four gaps per chromosome on average, with most chromosomes within ±1% of the length of CHM13. Nearly 48% of protein-coding genes have non-synonymous amino acid changes between haplotypes, and centromeric regions showed the highest diversity. Our findings serve as a foundation for assembling near-complete diploid human genomes at scale for a pangenome reference to capture global genetic variation from single nucleotides to structural rearrangements.

We don't need to get into all the details but there are a few observations of interest.

- All of the attempted assemblies were reasonably good but the best ones had to make use of the parental genomes to resolve discrepancies.

- Some assemblies began by separating the HG002 (child) sequences into two separate groups based on their similarity to one of the parents. Others generated assemblies without using the parental data then fixed any problems by using the parental genomes and a technique called "graph-based phasing." The second approach was better.

- All of the final assemblies were contaminated with varying amounts of E. coli and yeast DNA and/or various adaptor DNA sequences that were not removed by filters. All of them were contaminated with mitochondrial DNA that did not belong in the assembled chromosomes.

- The most common sources of assembly errors were: (1) missing joins where large stretches of DNA should have been brought together, (2) misjoins where two large stretches (contigs) were inappropriately joined, (3) incorrect inversions, and (4) false duplications.

- The overall accuracy of the best assemblies was one base pair error in 100,000 bp (10⁻⁵).

- Using the RefSeq database of 27,225 genes, most assemblies captured almost all of these confirmed and probable genes but several hundred were not complete and many were missing.

- No chromosome was complete telomere-to-telomere (T2T) but most were nearly complete including the complicated centromere and telomere regions.

- The two genomes (paternal and maternal) differed at 2.6 million SNPs (single-nucleotide polymorphisms), 631,000 small structural variations (<50 bp), and 11,600 large structural variations (>50 bp).

- The consortium used the best assembly algorithm to analyze the genomes of an additional 47 individuals. They began with the same coverage used for HG002; namely, 35X coverage. (Each stretch of DNA was sequenced 35 times on average - about equal amounts in both directions.) This was not successful so they had to increase the coverage to 130X to get good assemblies. They estimate that each additional diploid sequence will require 50-60X coverage. This kind of coverage would have been impossible in the 1990s when the first human genome was assembled but now it's fairly easy as long as you have the computer power and storage to deal with it.
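Two of the numbers in the list above are easier to appreciate with a little arithmetic: the coverage levels and the error rate. The sketch below assumes that coverage is measured against a haploid genome of about 3.1 Gb (my assumption, not a figure from the paper) and uses the standard Phred scale, Q = -10 log10(error rate), to express accuracy. The function names are hypothetical.

```python
# Rough arithmetic for sequencing coverage and assembly accuracy.
# Assumption: coverage is relative to a haploid genome of about 3.1 Gb.
import math

HAPLOID_GENOME_BP = 3.1e9


def bases_required(coverage, genome_bp=HAPLOID_GENOME_BP):
    """Total sequenced bases needed for a given average fold coverage."""
    return coverage * genome_bp


def phred_quality(error_rate):
    """Phred-scaled quality score for a per-base error probability."""
    return -10.0 * math.log10(error_rate)


def expected_errors(error_rate, genome_bp=HAPLOID_GENOME_BP):
    """Expected number of erroneous bases in one haploid assembly."""
    return error_rate * genome_bp


for coverage in (35, 50, 60, 130):
    gigabases = bases_required(coverage) / 1e9
    print(f"{coverage}X coverage needs about {gigabases:.0f} Gb of reads")

print(f"error rate 1e-5 is about Q{phred_quality(1e-5):.0f}")
print(f"expected errors per haploid genome: about {expected_errors(1e-5):,.0f}")
```

Under these assumptions, an error rate of one in 100,000 still leaves tens of thousands of incorrect bases in each haploid assembly, which is worth keeping in mind when comparing the two haplotypes.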

Junk DNA vs noncoding DNA

The Wikipedia article on the Human genome contained a reference that I had not seen before.

"Finally DNA that is deleterious to the organism and is under negative selective pressure is called garbage DNA.[43]"

Reference 43 is a chapter in a book.

Pena S.D. (2021) "An Overview of the Human Genome: Coding DNA and Non-Coding DNA". In Haddad LA (ed.). Human Genome Structure, Function and Clinical Considerations. Cham: Springer Nature. pp. 5–7. ISBN 978-3-03-073151-9.

Sérgio Danilo Junho Pena is a human geneticist and professor in the Dept. of Biochemistry and Immunology at the Federal University of Minas Gerais in Belo Horizonte, Brazil. He is a member of the Human Genome Organization council. If you click on the Wikipedia link, it takes you to an excerpt from the book where S.D.J. Pena discusses "Coding and Non-coding DNA."

There are two quotations from that chapter that caught my eye. The first one is,

"Less than 2% of the human genome corresponds to protein-coding genes. The functional role of the remaining 98%, apart from repetitive sequences (constitutive heterochromatin) that appear to have a structural role in the chromosome, is a matter of controversy. Evolutionary evidence suggests that this noncoding DNA has no function—hence the common name of 'junk DNA.'"

Professor Pena then goes on to discuss the ENCODE results pointing out that there are many scientists who disagree with the conclusion that 80% of our genome is functional. He then says,

"Many evolutionary biologists have stuck to their guns in defense of the traditional and evolutionary view that non-coding DNA is 'junk DNA.'"

This is immediately followed by a quote from Dan Graur, implying that he (Graur) is one of the evolutionary biologists who defend the evolutionary view that noncoding DNA is junk.

I'm very interested in tracking down the reason for equating noncoding DNA and junk DNA, especially in contexts where the claim is obviously wrong. So I wrote to Professor Pena—he got his Ph.D. in Canada—and asked him for a primary source that supports the claim that "evolutionary science suggests that this noncoding DNA has no function."

He was kind enough to reply saying that there are multiple sources and he sent me links to two of them. Here's the first one.

I explained that this was somewhat ironic since I had written most of the Wikipedia article on Non-coding DNA and my goal was to refute the idea that noncoding DNA and junk DNA were synonyms. I explained that under the section on 'junk DNA' he would see the following statement that I inserted after writing sections on all those functional noncoding DNA elements.

"Junk DNA is often confused with non-coding DNA[48] but, as documented above, there are substantial fractions of non-coding DNA that have well-defined functions such as regulation, non-coding genes, origins of replication, telomeres, centromeres, and chromatin organizing sites (SARs)."

That's intended to dispel the notion that proponents of junk DNA ever equated noncoding DNA and junk DNA. I suggested that he couldn't use that source as support for his statement.

Here's my response to his second source.

The second reference is to a 2007 article by Wojciech Makalowski,1 a prominent opponent of junk DNA. He says, "In 1972 the late geneticist Susumu Ohno coined the term "junk DNA" to describe all noncoding sections of a genome" but that is a demonstrably false statement in two respects.

First, Ohno did not coin the term "junk DNA" - it was commonly used in discussions about genomes and even appeared in print many years before Ohno's paper. Second, Ohno specifically addresses regulatory sequences in his paper so it's clear that he knew about functional noncoding DNA that was not junk. He also mentions centromeres and I think it's safe to assume that he knew about ribosomal RNA genes and tRNA genes.

The only possible conclusion is that Makalowski is wrong on two counts.

I then asked about the second statement in Professor Pena's article and suggested that it might have been much better to say, "Many evolutionary biologists have stuck to their guns and defend the view that most of human genome is junk." He agreed.

So, what have we learned? Professor Pena is a well-respected scientist and an expert on the human genome. He is on the council of the Human Genome Organization. Yet, he propagated the common myth that noncoding DNA is junk and saw nothing wrong with Makalowski's false reference to Susumu Ohno. Professor Pena himself must be well aware of functional noncoding elements such as regulatory sequences and noncoding genes so it's difficult to explain why he would imagine that prominent defenders of junk DNA don't know this.

I think the explanation is that this connection between noncoding DNA and junk DNA is so entrenched in the popular and scientific literature that it is just repeated as a meme without ever considering whether it makes sense.

1. The pdf appears to be a response to a query in Scientific American on February 12, 2007. It may be connected to a Scientific American paper by Khajavinia and Makalowski (2007).

Khajavinia, A., and Makalowski, W. (2007) What is "junk" DNA, and what is it worth? Scientific American, 296:104. [PubMed]