The Art of Insight, by Alberto Cairo, highlights how designers approach visualization with a wide view.

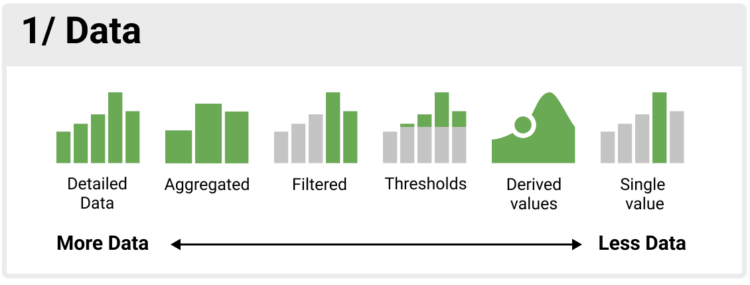

In the narrowest view of data visualization, you use charts to pull quick, quantitative information from dashboards and reports. Take a few steps back and you get exploratory data analysis and then storytelling. Keep going and you get a fluid-like approach to visualization that gives more attention to beauty, emotion, and qualitative insights that are difficult to measure. The Art of Insight, the final book in Cairo’s three-book series, focuses on the more fluid approach.

As a slow reader, I read this surprisingly quick. I enjoyed reading and thinking about the less mechanical side of visualizing data.

Tags: Alberto Cairo, book